
These days, you would be hard-pressed to find a bank, supermarket or retailer that still relies on paper records for transactions. But remarkably, one in five physicians still doesn’t use electronic medical records (EMRs), and almost half of those using EMRs don’t use these systems to their fullest, according to the U.S. Department of Health and Human Services.

A similar disconnect can even be found in academic medicine. “Most healthcare data are stored in silos, with separate and incompatible systems for patient records, clinical research findings, genomic data and so on,” says Parsa Mirhaji, M.D., Ph.D., director of clinical research informatics at Einstein and Montefiore and a research associate professor of systems & computational biology at Einstein. “This is true within institutions and between institutions. We’re not making full use of our biomedical data, and this greatly hinders discovery and innovation.”

But with the advent of informatics—using computer systems to create, store, manipulate and share information—things are changing quickly. Leading academic medical centers have started building the infrastructure that allows findings from each research study and patient encounter to be systematically captured, assessed and—most importantly—used to improve patient care.

To support such an effort at Einstein and Montefiore, Dr. Mirhaji is creating a “semantic data lake,” an integrated reservoir that collects all data flowing into the two institutions, with links to a wide variety of outside resources, from PharmGKB (a databank of how variations in human genetics lead to variations in drug responses) to UMLS (the National Institutes of Health’s Unified Medical Language System) to OMIM (the Online Mendelian Inheritance in Man, a continuously updated catalogue of human genes and genetic disorders and traits).

The semantic data lake’s purpose is not just to gather data. It also defines, contextualizes, annotates, characterizes and indexes data, which is the “semantic” aspect of the data lake. Providing context and meaning to each bit of data should help investigators in several ways, allowing them to make new connections between genomic data and clinical phenomena, link environmental exposures to diseases, develop “smart” applications for clinical decision-making, create personalized therapies and assess community health needs in real time, to cite a few examples.
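The article does not describe how the data lake works internally. As a rough illustration only, the sketch below assumes one common way of making data “semantic”: storing every fact as a subject-predicate-object triple, so that a local patient record can point at concepts held in outside resources and a query can follow those links. All identifiers, predicate names and the `drug_response_links` helper are hypothetical placeholders, not real UMLS, OMIM or PharmGKB entries.

```python
# A minimal sketch of "semantic" linking, assuming facts are stored as
# (subject, predicate, object) triples. Every identifier below is a
# hypothetical placeholder, not a real UMLS, OMIM or PharmGKB ID.
Triple = tuple[str, str, str]

TRIPLES: set[Triple] = {
    ("patient:001", "hasDiagnosis", "umls:EXAMPLE_CONCEPT"),
    ("patient:001", "hasVariant", "variant:EXAMPLE_1"),
    ("patient:002", "hasVariant", "variant:EXAMPLE_1"),
    ("patient:003", "hasVariant", "variant:EXAMPLE_2"),
    ("variant:EXAMPLE_1", "describedIn", "omim:EXAMPLE_ENTRY"),
    ("variant:EXAMPLE_1", "affectsResponseTo", "pharmgkb:EXAMPLE_DRUG"),
}


def objects(store: set[Triple], subject: str, predicate: str) -> set[str]:
    """Return every object linked to `subject` by `predicate`."""
    return {o for s, p, o in store if s == subject and p == predicate}


def drug_response_links(store: set[Triple]) -> dict[str, set[str]]:
    """Follow two hops (patient -> variant -> drug-response annotation)
    and return, per patient, the drugs they may respond to differently."""
    links: dict[str, set[str]] = {}
    for subject, predicate, variant in store:
        if predicate != "hasVariant":
            continue
        drugs = objects(store, variant, "affectsResponseTo")
        if drugs:
            links.setdefault(subject, set()).update(drugs)
    return links


if __name__ == "__main__":
    for patient, drugs in sorted(drug_response_links(TRIPLES).items()):
        print(patient, sorted(drugs))
```

A production system would more likely use a standard triple format such as RDF with a dedicated query engine; the plain-Python version simply makes the two-hop lookup visible.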

Big Data’s potential is already evident in several places around Montefiore. One is the division of critical-care medicine, which uses the power of clinical research informatics to redefine the treatment of acute respiratory failure (ARF), a common type of organ failure in the hospital associated with high mortality and loss of function.

ARF usually affects critically ill patients suffering from acute illnesses such as pneumonia or from chronic health conditions. It’s difficult to predict which lung patients will develop ARF and when they’ll develop it. All too often, clinicians are left to deal with ARF after it culminates in respiratory distress, when supportive therapy on a mechanical ventilator must be started immediately. A 2010 study of older patients with ARF on mechanical ventilation in intensive care units in the U.S., published in the Journal of the American Medical Association, found that 30 percent of patients die within six months.

“There’s no single sign or symptom that announces the onset of ARF in its early phases,” says Michelle Ng Gong, M.D., a professor of medicine (critical care) and of epidemiology & population health at Einstein and director of critical-care research at Montefiore. “Rather, there’s usually a subtle pattern of abnormalities, none of which on its own is enough to raise a red flag until very late in the course of the illness.”

Try as they might, even the most experienced physicians have a hard time discerning these patterns. But an informatics tool called predictive analytics shows promise for doing so.

Michelle Ng Gong, M.D., right, with first-year internal medicine residents Andre Bryan, M.D., and Dahlia Townsend, M.D.

Several years ago, Dr. Gong and her colleagues started scouring the EMRs of thousands of patients who ultimately needed mechanical ventilation (the most intensive supportive therapy for ARF). The goal: to find clinical patterns that might predict the onset of lung failure. The researchers identified 44 key clinical variables—including heart rate, oxygen saturation and hemoglobin levels—which were inserted into a prediction model called APPROVE (for Accurate Prediction of Prolonged Ventilation score). A retrospective validation study involving more than 34,000 patients showed that APPROVE was better than most existing tools at predicting the onset of ARF.
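The text reports only that APPROVE outperformed most existing tools; it does not name the yardstick. One widely used way to compare risk scores on the same retrospective cohort is the area under the ROC curve (AUROC): the chance that a randomly chosen patient who went on to need ventilation was scored higher than one who did not. The sketch below assumes that metric; the function name and toy numbers are illustrative, not data from the study.

```python
def auroc(scores: list[float], outcomes: list[bool]) -> float:
    """Area under the ROC curve via pairwise comparison: the fraction of
    (positive, negative) pairs in which the positive case scored higher.
    Ties count as half a win."""
    positives = [s for s, y in zip(scores, outcomes) if y]
    negatives = [s for s, y in zip(scores, outcomes) if not y]
    if not positives or not negatives:
        raise ValueError("need at least one positive and one negative case")
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(positives) * len(negatives))


if __name__ == "__main__":
    needed_ventilation = [True, True, False, False]
    tool_a = [0.9, 0.8, 0.3, 0.2]  # ranks every outcome case above every non-case
    tool_b = [0.9, 0.2, 0.3, 0.8]  # no better than a coin flip on this toy cohort
    print(auroc(tool_a, needed_ventilation), auroc(tool_b, needed_ventilation))
```

This pairwise loop grows quadratically with cohort size; for tens of thousands of patients, a rank-based calculation such as scikit-learn’s `roc_auc_score` gives the same value far faster.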

As a practical matter, clinicians would be overwhelmed trying to monitor and analyze dozens of constantly changing clinical variables for every patient in their care. So APPROVE is being incorporated into Montefiore’s EMR system, where its algorithm will run silently in the background, periodically analyzing each patient’s clinical data for early warning signs of ARF.
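The idea of a score running silently in the background can be sketched in a few lines. This is a hypothetical illustration, not APPROVE itself: the weights, intercept and alert threshold are invented, and only three of the variables mentioned in the text (heart rate, oxygen saturation, hemoglobin) stand in for the model’s 44. Each pass turns a patient’s latest values into a single 0-to-1 risk with a logistic function and returns the patients above the cutoff, highest risk first.

```python
import math
from dataclasses import dataclass

# Hypothetical coefficients for three of the variables named in the text.
# These are NOT the published APPROVE coefficients; they only show the shape
# of a score that turns several measurements into one number.
WEIGHTS = {"heart_rate": 0.03, "spo2": -0.10, "hemoglobin": -0.15}
INTERCEPT = 7.0
ALERT_THRESHOLD = 0.5  # hypothetical cutoff for raising a flag


@dataclass
class Observation:
    patient_id: str
    heart_rate: float  # beats per minute
    spo2: float        # oxygen saturation, percent
    hemoglobin: float  # grams per deciliter


def risk_score(obs: Observation) -> float:
    """Map a weighted sum of the variables to a 0-1 risk via the logistic function."""
    z = INTERCEPT + sum(w * getattr(obs, name) for name, w in WEIGHTS.items())
    return 1.0 / (1.0 + math.exp(-z))


def background_scan(latest: list[Observation]) -> list[str]:
    """One pass of the periodic scan: return flagged patient IDs, highest risk first."""
    scored = sorted(((risk_score(o), o.patient_id) for o in latest), reverse=True)
    return [pid for score, pid in scored if score >= ALERT_THRESHOLD]


if __name__ == "__main__":
    ward = [
        Observation("bed-12", heart_rate=120, spo2=88, hemoglobin=9),
        Observation("bed-14", heart_rate=75, spo2=98, hemoglobin=14),
    ]
    print(background_scan(ward))  # ['bed-12']
```

In a real deployment the EMR would trigger each pass on a schedule or whenever new results arrive; that plumbing is left out here.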

“We have an awful lot of data on our patients,” says Dr. Gong. “APPROVE will make those data more transparent and help make clinicians more aware of changing, and potentially dangerous, situations. APPROVE won’t capture everyone—some patients are in respiratory distress the minute they present in the emergency department. But in a hospital that has more than 600 inpatients, it allows our critical-care team to focus our attention on those patients who seem to be at highest risk.”

The companion to the APPROVE early warning system is PROOFcheck (Prevention of Organ Failure checklist). Once APPROVE signals that a patient is at risk for ARF, the EMR will present clinicians with a customized list of best practices for limiting lung injury in that patient. The team is now running a clinical trial, funded by the National Heart, Lung, and Blood Institute, that will test whether using PROOFcheck improves clinical outcomes. Dr. Mirhaji’s semantic data lake provides the Big Data platform allowing models such as APPROVE and PROOFcheck to be developed and integrated into the healthcare delivery process.
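The hand-off from early warning to checklist can be pictured as a small rule table: once a patient is flagged, the system keeps the recommendations whose conditions match that patient. The sketch below assumes that rule-based design; `RULES`, `PatientContext` and `build_checklist` are hypothetical names, and the checklist strings are placeholders rather than the actual PROOFcheck items.

```python
from dataclasses import dataclass, field
from typing import Optional

# Hypothetical rule table: (required condition, checklist item).
# A required condition of None means the item applies to every flagged patient.
# These strings are placeholders, NOT the actual PROOFcheck items.
RULES: list[tuple[Optional[str], str]] = [
    (None, "Reassess respiratory status"),
    ("pneumonia", "Review pneumonia-specific measures"),
    ("sepsis", "Review sepsis-specific measures"),
]


@dataclass
class PatientContext:
    patient_id: str
    at_risk: bool  # set by the early-warning score
    conditions: set[str] = field(default_factory=set)


def build_checklist(ctx: PatientContext) -> list[str]:
    """Return the customized recommendations for one patient, in rule order.
    Patients who have not been flagged get an empty list."""
    if not ctx.at_risk:
        return []
    return [item for condition, item in RULES
            if condition is None or condition in ctx.conditions]


if __name__ == "__main__":
    print(build_checklist(PatientContext("bed-12", True, {"pneumonia"})))
```

Keeping the rules as data rather than code makes it easy to revise the list as evidence accumulates.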

The PROOFcheck clinical trial will also collect feedback, drawn from physicians and clinical records, to learn what happens after the checklist is generated. The checklists are recommendations rather than instructions. The goal is to learn whether clinicians follow the checklist and, if not, why not, so that the system can continuously learn from these clinical interactions.
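How the trial records this feedback is not described here. Assuming each recommendation’s outcome is logged as followed or not followed, with an optional reason, a summary like the hypothetical `summarize_feedback` below is the kind of signal a learning system could use to revise its recommendations: how often each item is followed, and the most common reason when it is not.

```python
from collections import Counter, defaultdict
from typing import Iterable, Optional


def summarize_feedback(
    responses: Iterable[tuple[str, bool, Optional[str]]],
) -> dict[str, dict]:
    """Summarize clinician responses to checklist recommendations.

    Each response is (item, followed, reason_if_not_followed). Returns, per
    item, the share of times it was followed and the most common reason
    given when it was not (None if no reason was recorded).
    """
    shown: Counter = Counter()
    followed: Counter = Counter()
    reasons: defaultdict = defaultdict(Counter)
    for item, was_followed, reason in responses:
        shown[item] += 1
        if was_followed:
            followed[item] += 1
        elif reason:
            reasons[item][reason] += 1
    return {
        item: {
            "adherence": followed[item] / shown[item],
            "top_reason": reasons[item].most_common(1)[0][0] if reasons[item] else None,
        }
        for item in shown
    }
```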

Dr. Gong’s primary motivation is to save lives, but her work may also save money. “Care is extremely expensive in the ICU [intensive care unit], where ARF is treated,” says Dr. Gong. “ICU patients with ARF tend to have the longest stays and often need to be readmitted to the hospital after they’re discharged. If we can prevent just a few of these patients from progressing to ARF, we could dramatically improve their outcomes and reduce hospitalization costs for both the patient and the hospital.”

By linking several heart conditions to specific genetic defects, cardiology was among the first medical subspecialties to tap the potential of modern genetics. Today, for example, when a young patient is suspected of having a potentially fatal arrhythmia called long QT syndrome, testing 16 different genes will usually find a telltale genetic variant in one of them—confirming the diagnosis and informing treatment.

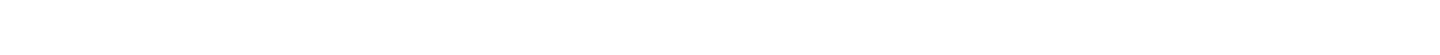

“We’ll find a clinically important variant in about 75 percent of patients with long QT,” says Thomas V. McDonald, M.D., a professor of medicine (cardiology) and of molecular pharmacology and co-director of the Montefiore Einstein Center for CardioGenetics. “The challenge is determining the cause of the syndrome in the other 25 percent.”

Thomas V. McDonald, M.D., in his lab.

Clues sometimes arise through expanded testing that involves sequencing a patient’s exome. This subset of the genome consists of DNA segments that code for proteins (i.e., our 20,000 or so genes). “When we’ve done this on a research basis, we’ve found up to 800,000 variants—changes from what is considered the normal exome—in a single patient. That will sometimes lead us to a well-known deleterious mutation. But most of the time, we don’t know if the genetic variants have anything to do with the patient’s condition,” says Dr. McDonald.

The exome accounts for just 1.5 percent of the genome. If exome sequencing doesn’t reveal genes that influence disease, the next step (looking for variants in the whole genome and its three billion base pairs) is even more daunting. The whole genome also includes the remaining 98.5 percent of our DNA, which does not code for proteins but can still affect our health.
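A quick back-of-the-envelope calculation, using only the figures above, shows the jump in scale:

$$
0.015 \times \left(3 \times 10^{9}\ \text{base pairs}\right) \approx 4.5 \times 10^{7}\ \text{base pairs in the exome}
$$

So moving from the exome to the whole genome means searching roughly $1/0.015 \approx 67$ times as much sequence.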

In 2008, to provide an overview of all human genetic variation, the 1000 Genomes Project began sequencing the whole genomes of 1,000 people around the globe. After completing the sequencing of 2,500 individual genomes last year, the project reported that the average person has 3.5 million to 5 million variants relative to what is considered a “normal” genome.

“What’s needed are bioinformatics and analytics systems that can sift through all the relevant data, try to make sense of them and then deliver a report directly to the patient’s electronic medical record,” says Dr. McDonald. “And you’d want this to be fully automated, so that the clinician simply has to draw a blood sample, send it to the lab and wait for the magic to happen. This is just in my mind at this stage, but I think we’ll get there.”
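Dr. McDonald describes the fully automated pipeline as a goal rather than an existing system. One small step of it can be sketched under stated assumptions: given a patient’s variants, a gene panel (such as the 16 genes tested for long QT syndrome) and a catalog of variants already known to be harmful, sort the variants into sections of a report. The panel, catalog and `triage` function below are hypothetical; none of the entries are real genes or variants.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Variant:
    gene: str
    change: str  # description of the DNA or protein change (placeholder text here)


# Hypothetical inputs: a gene panel and a catalog of variants already known
# to be harmful. None of these entries are real genes or real variants.
PANEL = {"GENE_A", "GENE_B"}
KNOWN_DELETERIOUS = {Variant("GENE_A", "EXAMPLE_CHANGE_1")}


def triage(variants: list[Variant]) -> dict:
    """Split one patient's variants into the sections of a simple report."""
    report = {"known_deleterious": [], "uncertain_in_panel": [], "outside_panel": 0}
    for v in variants:
        if v in KNOWN_DELETERIOUS:
            report["known_deleterious"].append(v)
        elif v.gene in PANEL:
            report["uncertain_in_panel"].append(v)
        else:
            report["outside_panel"] += 1  # counted, not listed, to keep the report short
    return report
```

The hard part Dr. McDonald describes, deciding what an unfamiliar variant means, is exactly what this sketch leaves in the “uncertain” bucket.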

Dr. McDonald is working with Dr. Mirhaji and the software giant Microsoft to bake this precision-medicine approach into an app available at the point of care—not only for cardiogenetics but also for other specialties such as pediatrics and cancer.

Parsa Mirhaji, M.D., Ph.D.

Einstein’s and Montefiore’s Big Data initiatives extend well beyond the Bronx. Both institutions participate in the New York City Clinical Data Research Network (NYCCDRN), which is linking clinical researchers throughout the city in an effort to conduct health-outcomes studies more efficiently and at lower cost. In just its first year, the NYCCDRN has already gathered clinical data on more than four million patients, a number expected to reach six million by next year. This mammoth network will give researchers access to larger and more-diverse study populations.

NYCCDRN and its 10 sister networks around the country make up PCORnet—the National Patient-Centered Clinical Research Network. One of PCORnet’s aims is to foster research into some of the 7,000 rare diseases that collectively affect more than 25 million Americans.

PCORnet will also help in the study of common diseases, which often require patient enrollments far beyond the scope of a single medical center. For PCORnet’s first research project, the nation’s 11 Clinical Data Research Networks (CDRNs) are participating in a study of heart disease patients to compare the safety and effectiveness of low- and high-dose aspirin for preventing heart attack and stroke. Doctors have prescribed aspirin to such patients for decades, but the ideal dose still isn’t known.

It’s hard to imagine anyone more qualified than Dr. Mirhaji to lead Einstein’s and Montefiore’s Big Data initiatives. A native of Iran, Dr. Mirhaji first became interested in computers while studying medicine at Tehran University, beginning in the late 1980s. At the time, finding a computer that could connect to medical databases outside the insular republic was nearly impossible. With nowhere to turn for technical support, he taught himself Unix, an operating system widely used on networked computers, and built the necessary Internet connections on his own. Dozens of faculty members and hundreds of medical students came to rely on his electronic links to the outside world.

Dr. Mirhaji’s prowess with computer networks piqued the interest of university researchers, who asked him to create the infrastructure for linking clinical databases on thousands of patients spread over several hospitals.

In 2001, Dr. Mirhaji relocated from Tehran to the University of Texas Health Science Center in Houston for a fellowship in cardiology. The attacks of 9/11 soon followed, changing the course of his career. With his background in data networks and data analytics, Dr. Mirhaji developed Houston’s first public health preparedness network, which received the Department of Health and Human Services’ “Best Practice in Public Health Award” in 2002. He later received several grants to build a bioterrorism surveillance network for Houston and Harris County, TX.

No bioterror attacks hit Texas, but Dr. Mirhaji’s informatics infrastructure would serve another purpose: providing the foundation for the state’s surveillance system for natural disasters, which proved invaluable during Hurricane Katrina. “We built a system literally overnight that Houston’s health department used to support evacuees coming from New Orleans and to determine how they were faring in the various shelters,” he says. Dr. Mirhaji went on to create a surveillance system for the Harris County, TX, health department to monitor schools for outbreaks of avian flu.

Dr. Mirhaji’s success in informatics effectively ended his ambition to become a cardiologist. “Clinical life is rewarding, but there’s an engineer in me,” says Dr. Mirhaji, who completed a doctorate in health informatics at the University of Texas in 2009. “I’m still working on clinical problems, just on a much larger scale. You should have seen the people inside the shelters after Katrina. It was awful, and no one else was in a position to help them.”

In 2012, Dr. Mirhaji moved north to be closer to his extended family and to lead Einstein’s and Montefiore’s forays into health informatics.

A Visit to the White House

Thanks to his work at Einstein and Montefiore, Dr. Mirhaji was invited to participate in President Obama’s Precision Medicine Initiative Summit, held at the White House on February 25, 2016. The summit brought together healthcare leaders from across the country to begin integrating biological, clinical, environmental and administrative data to spawn research innovations and improve the delivery of care.